Gradient Descent Machine Learning Github

`J_history = zeros(num_iters, 1);` Update step: $w^{k} = w^{k-1} - \alpha \nabla g(w^{k-1})$.

Intuition Of Gradient Descent For Machine Learning Abdullah Al Imran

Differentiating the cost function `(np.dot(xs, self_thetas) - ys)**2` with respect to `self_thetas` gives `dw = 2 * (np.dot(xs, self_thetas) - ys) * xs`. For M examples, with the cost scaled by 1/(2M), we have `dw = 2 * (np.dot(xs, self_thetas) - ys) * xs / (2 * M) = 2 * diffs * xs / (2 * M) = diffs * xs / M`, i.e. `np.dot(xs_transposed, diffs) / num_examples`.
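The derivative above can be checked numerically. The following is a minimal sketch, assuming a mean squared error cost with the 1/(2M) factor; the function names `cost`, `gradient` and `numerical_gradient` and the random toy data are illustrative assumptions, not taken from the original code.

```python
import numpy as np

def cost(xs, ys, thetas):
    """Squared error cost with the 1/(2M) factor (assumed convention)."""
    diffs = np.dot(xs, thetas) - ys
    return np.sum(diffs ** 2) / (2 * len(ys))

def gradient(xs, ys, thetas):
    """Analytic gradient: np.dot(xs_transposed, diffs) / num_examples."""
    diffs = np.dot(xs, thetas) - ys
    return np.dot(xs.T, diffs) / len(ys)

def numerical_gradient(xs, ys, thetas, eps=1e-6):
    """Central finite differences, one coordinate of thetas at a time."""
    grad = np.zeros_like(thetas)
    for j in range(len(thetas)):
        step = np.zeros_like(thetas)
        step[j] = eps
        grad[j] = (cost(xs, ys, thetas + step) - cost(xs, ys, thetas - step)) / (2 * eps)
    return grad

# Hypothetical toy data: 20 examples, 3 features.
rng = np.random.default_rng(0)
xs = rng.normal(size=(20, 3))
ys = rng.normal(size=20)
thetas = rng.normal(size=3)

analytic = gradient(xs, ys, thetas)
numeric = numerical_gradient(xs, ys, thetas)
print(np.allclose(analytic, numeric, atol=1e-6))
```

If the two gradients agree, the vectorized expression is a correct derivative of this particular cost.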

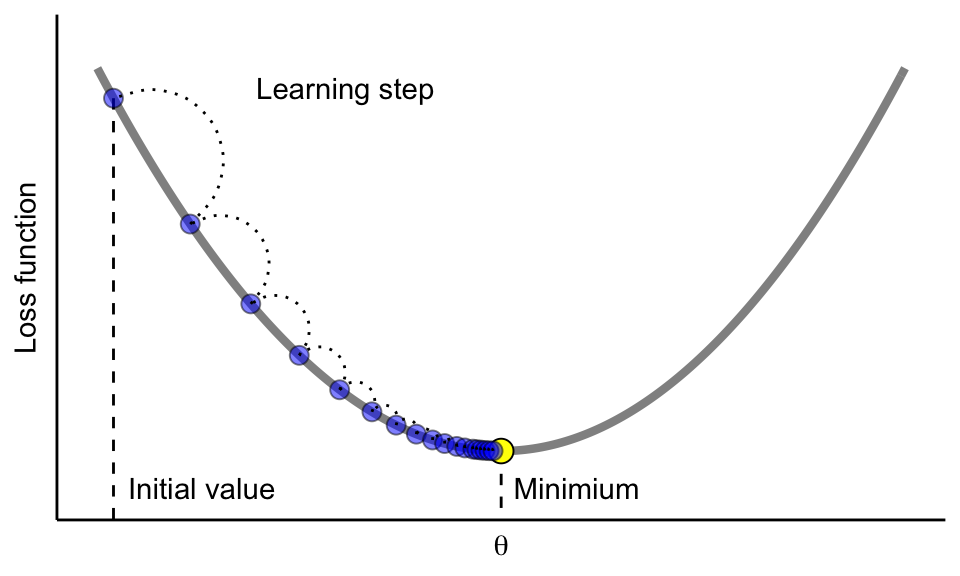

Gradient descent takes steps proportional to the negative of the gradient to find a local minimum of a function. `GRADIENTDESCENT` performs gradient descent to learn theta: `theta = GRADIENTDESCENT(X, y, theta, alpha, num_iters)` updates theta by taking `num_iters` gradient steps with learning rate `alpha`. It first initializes some useful values, such as the number of training examples.

This is part 3 of my post on Linear Models. Parameters refer to coefficients in linear regression and weights in neural networks. For $k = 1 \ldots K$.

According to Aurélien Géron's book Hands-On Machine Learning with Scikit-Learn & TensorFlow (a great book for anyone who is starting out). However, I believe that to excel in a field you should understand the tools you are using. The gradient descent algorithm.

In part 1 I discussed Linear Regression and Gradient Descent, and in part 2 I discussed Logistic Regression, along with their implementations in Python. Input: function $g$, steplength $\alpha$, maximum number of steps $K$, and initial point $w^0$. `N = float(len(x_val))`, then `for i in range(0, len(x_val)):`.

Python machine learning applications in image processing and algorithm implementations including Dawid-Skene, Platt-Burges, Expectation Maximization, Factor Analysis, Gaussian Mixture Model, OPTICS, DBSCAN, Random Forest, Decision Tree, Support Vector Machine, Independent Component Analysis, Latent Semantic Indexing, Principal Component Analysis, Singular Value Decomposition, K. `GRADIENTDESCENTMULTI` performs gradient descent to learn theta: `theta = GRADIENTDESCENTMULTI(x, y, theta, alpha, num_iters)` updates theta by taking `num_iters` gradient steps with learning rate `alpha`. It first initializes some useful values, such as the number of training examples.

In machine learning, we use gradient descent to update the parameters of our model. Implement gradient descent in your. Contribute to saheelb/Machine-learning-algorithm-PDF development by creating an account on GitHub.

`for iter = 1:num_iters`. At the same time, it is one of the easiest to understand.

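The gradient is the derivative (for a function of several variables, the vector of its partial derivatives). Output: history of weights $\{w^{k}\}_{k=0}^{K}$ and corresponding function evaluations $\{g(w^{k})\}_{k=0}^{K}$. In machine learning, the number of observations is often very high, as is the number of predictors.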
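The generic algorithm (a function $g$, a steplength $\alpha$, a maximum number of steps $K$, an initial point $w^0$, and a returned history of weights and function evaluations) might be sketched as follows. The function name `gradient_descent`, the explicit `grad` argument and the quadratic test function are assumptions made for illustration.

```python
import numpy as np

def gradient_descent(g, grad, alpha, max_steps, w0):
    """Run max_steps gradient steps from w0 and record every iterate.

    g:    function to minimize
    grad: gradient of g (assumed to be supplied by the caller)
    """
    w = np.asarray(w0, dtype=float)
    weight_history = [w.copy()]   # w^0 ... w^K
    cost_history = [g(w)]         # g(w^0) ... g(w^K)
    for _ in range(max_steps):
        w = w - alpha * grad(w)   # w^k = w^(k-1) - alpha * grad g(w^(k-1))
        weight_history.append(w.copy())
        cost_history.append(g(w))
    return weight_history, cost_history

# Hypothetical test function: g(w) = ||w||^2, minimized at the origin.
g = lambda w: float(np.sum(w ** 2))
grad_g = lambda w: 2 * w

weights, costs = gradient_descent(g, grad_g, alpha=0.1, max_steps=50, w0=[3.0, -4.0])
print(weights[-1], costs[-1])
```

Keeping the full history is handy for plotting the cost against the step number to see whether the chosen steplength is too large or too small.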

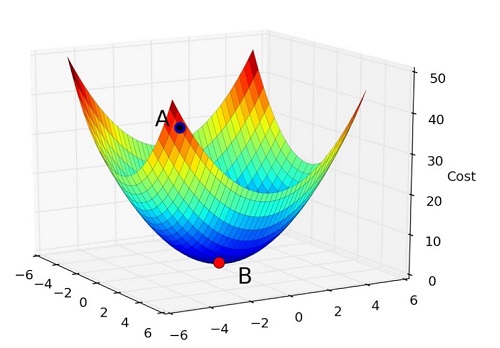

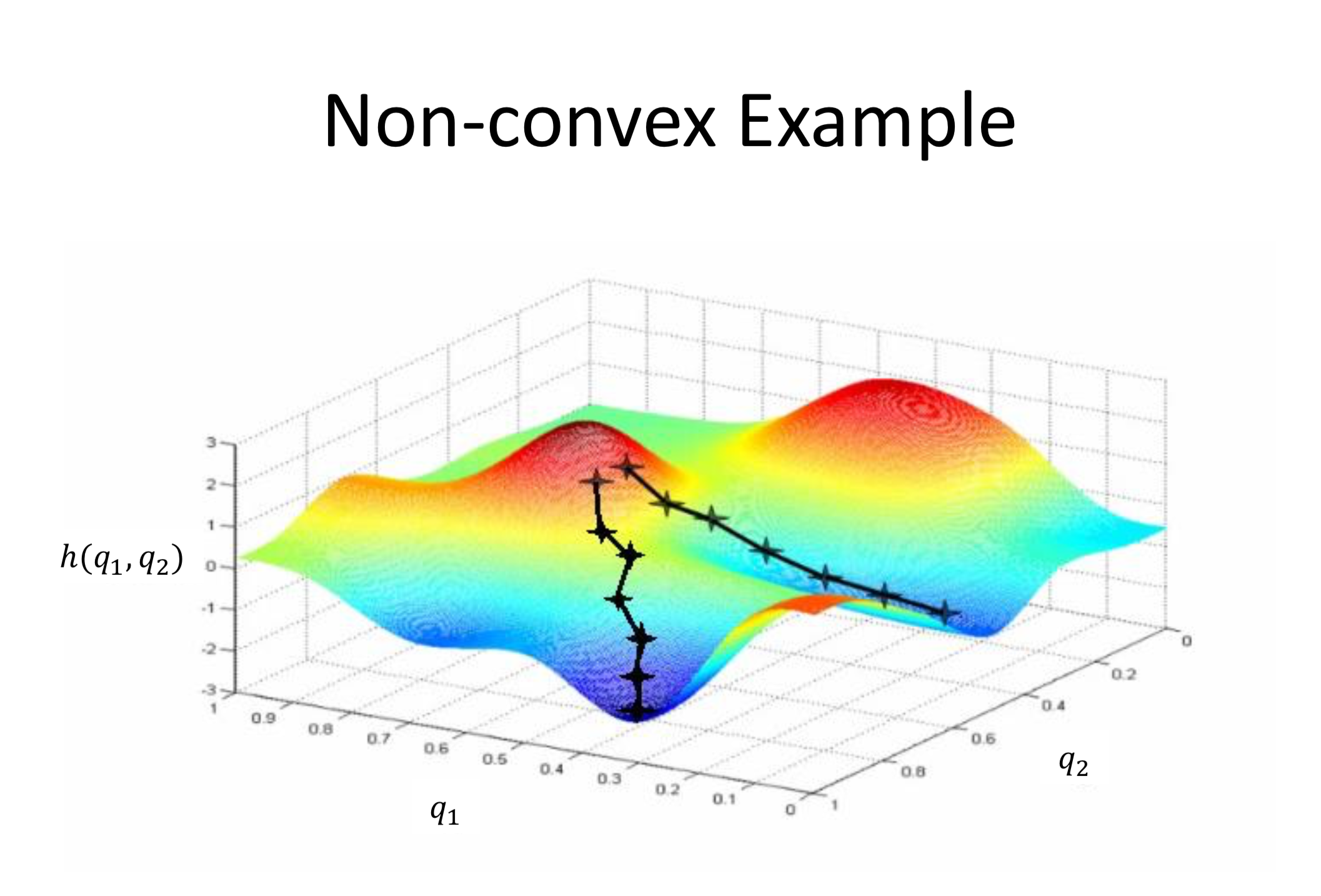

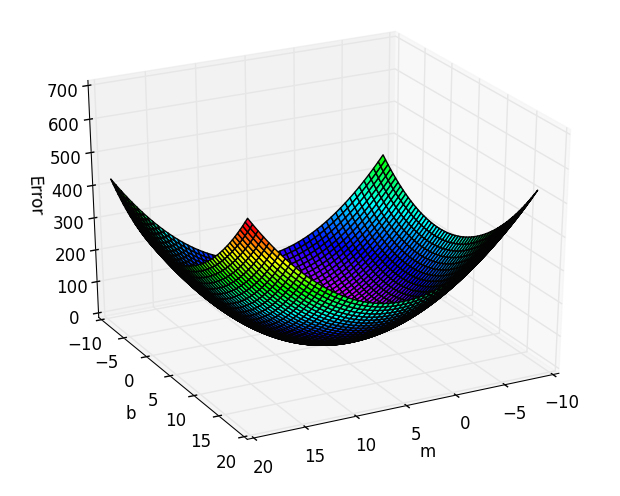

Gradient descent uses that hypothesized theta array, sees how far off it is using the cost function, tweaks the values in the theta array in the direction indicated by the partial derivatives and by the amount of the learning rate, and tries to come up with an answer that better fits all the data points. `GRADIENTDESCENT` performs gradient descent to learn theta: `theta = GRADIENTDESCENT(X, y, theta, alpha, num_iters)`. The following 3D figure shows an example of gradient descent.
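A rough Python counterpart of the Octave-style `GRADIENTDESCENT` routine could look like this. It is a sketch under the assumption of a linear regression cost with the 1/(2m) factor; `compute_cost` and the toy data are hypothetical and are not the original course code.

```python
import numpy as np

def compute_cost(X, y, theta):
    """Linear regression cost J(theta) = sum((X theta - y)^2) / (2m)."""
    m = len(y)
    residuals = X @ theta - y
    return float(residuals @ residuals) / (2 * m)

def gradient_descent(X, y, theta, alpha, num_iters):
    """Update theta by taking num_iters gradient steps with learning rate alpha."""
    m = len(y)                        # number of training examples
    J_history = np.zeros(num_iters)   # cost after each step
    theta = np.asarray(theta, dtype=float).copy()
    for it in range(num_iters):
        gradient = X.T @ (X @ theta - y) / m   # partial derivatives of J
        theta = theta - alpha * gradient       # step against the gradient
        J_history[it] = compute_cost(X, y, theta)
    return theta, J_history

# Hypothetical toy data generated from y = 1 + 2x (no noise).
x = np.linspace(0, 1, 50)
X = np.column_stack([np.ones_like(x), x])   # first column is the intercept term
y = 1.0 + 2.0 * x

theta, J_history = gradient_descent(X, y, np.zeros(2), alpha=0.5, num_iters=5000)
print(theta)
```

Here `J_history` plays the same role as in the Octave version: it records the cost after each step so that convergence can be inspected.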

`J_history = zeros(num_iters, 1);` Contribute to ahawker/machine-learning-coursera development by creating an account on GitHub.

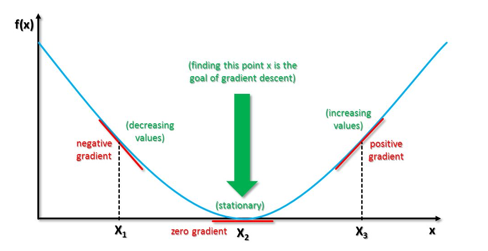

Gradient descent is an optimization algorithm for finding the minimum of a function. In fact most modern machine learning libraries have gradient descent built-in. Gradient descent tries to find one of the local minima.
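To illustrate that gradient descent finds one of the local minima rather than necessarily the global one, here is a small sketch on a one-dimensional function with two minima. The function `f`, the starting points and the learning rate are all assumptions picked for illustration.

```python
def f(x):
    """A non-convex function with two local minima."""
    return x ** 4 - 3 * x ** 2 + x

def f_prime(x):
    """Derivative of f."""
    return 4 * x ** 3 - 6 * x + 1

def descend(x0, learning_rate=0.01, num_steps=1000):
    """Plain gradient descent on f starting from x0."""
    x = x0
    for _ in range(num_steps):
        x = x - learning_rate * f_prime(x)
    return x

left = descend(-2.0)    # started to the left of both minima
right = descend(2.0)    # started to the right of both minima

# The two runs settle in different minima; the left one has the lower value.
print(left, f(left))
print(right, f(right))
```

Which minimum is reached depends only on the starting point here, which is why the initial point matters for non-convex functions.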

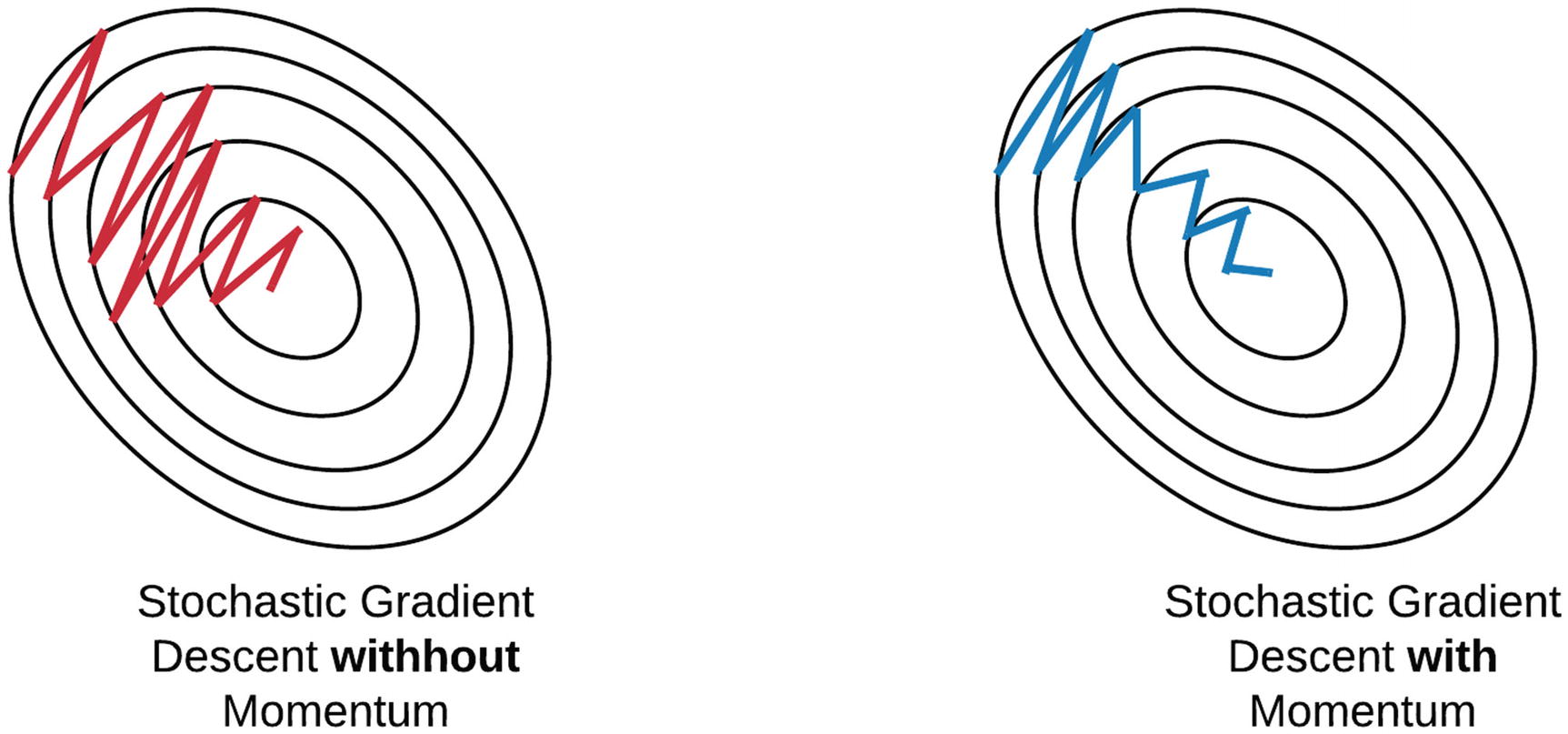

Gradient descent is probably one of the most widely used algorithms in Machine Learning and Deep Learning. Gradient descent is a very generic optimization algorithm capable of finding optimal solutions to a wide range of problems. Consequently, this operation is very expensive in terms of calculation and memory.

The gradient descent algorithm is an iterative optimization algorithm that allows us to find the solution while keeping the computational complexity low. Definition: Gradient descent is an optimization algorithm used to minimize some function by iteratively moving in the direction of steepest descent, as defined by the negative of the gradient. `def step_gradient(b_current, m_current, x_val, y_val, learning_rate):`

Number of training. Theta1 and theta0 are the two parameters. Machine Learning Spring 2014.

In this post I will discuss linear Support Vector Machines and their implementation using gradient descent. Inside the loop: `x = x_val[i]`, `y = y_val[i]`, `b_gradient += -(2 / N) * (y - (m_current * x + b_current))`, `m_gradient += -(2 / N) * x * (y - (m_current * x + b_current))`. After the loop: `new_b = b_current - learning_rate * b_gradient` and `new_m = m_current - learning_rate * m_gradient`.
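Assembled from the `step_gradient` fragments quoted on this page, a complete version might look like the sketch below. The accumulation with `+=`, the zero initialization of the gradients, the return value and the toy data are assumptions, since the original snippet is only partially shown.

```python
def step_gradient(b_current, m_current, x_val, y_val, learning_rate):
    """One gradient step for the line y = m * x + b under mean squared error."""
    b_gradient = 0.0
    m_gradient = 0.0
    N = float(len(x_val))
    for i in range(0, len(x_val)):
        x = x_val[i]
        y = y_val[i]
        error = y - (m_current * x + b_current)
        # Partial derivatives of (1/N) * sum(error^2) w.r.t. b and m.
        b_gradient += -(2 / N) * error
        m_gradient += -(2 / N) * x * error
    new_b = b_current - learning_rate * b_gradient
    new_m = m_current - learning_rate * m_gradient
    return new_b, new_m

# Hypothetical toy data lying exactly on y = 2x + 1.
x_val = [0.0, 1.0, 2.0, 3.0, 4.0]
y_val = [1.0, 3.0, 5.0, 7.0, 9.0]

b, m = 0.0, 0.0
for _ in range(5000):
    b, m = step_gradient(b, m, x_val, y_val, learning_rate=0.05)
print(b, m)
```

Each call performs a single update, so the caller controls the number of iterations and can log the intermediate values of `b` and `m`.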

Gradient Descent Github Topics Github

Gradient Descent And Stochastic Gradient Descent Mlxtend

Gradient Descent Statistics And Machine Learning In Python 0 5 Documentation

Github Dshahid380 Gradient Descent Algorithm Gradient Descent Algorithm Implement Using Python And Numpy Mathematical Implementation Of Gradient Descent

Chapter 12 Gradient Boosting Hands On Machine Learning With R

Gradient Descent Algorithm And Its Variants Imad Dabbura

Stochastic Gradient Descent For Machine Learning Clearly Explained Baptiste Monpezat

Florian Schafer Competitive Gradient Descent

Final Report Parallelizing Gradient Descent

A Brief Introduction To Gradient Descent Alykhantejani Com

Stochastic Gradient Descent Github Topics Github

Stochastic Gradient Descent On Your Microcontroller

Neural Networks In 100 Lines Of Pure Python
