Machine Learning High Training Accuracy And Low Test Accuracy

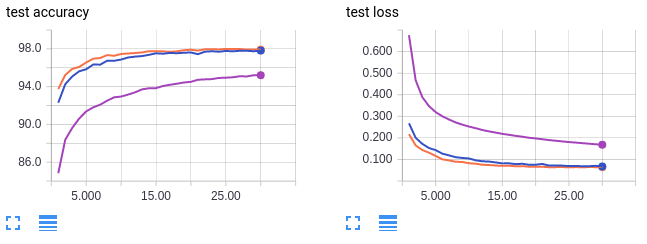

Each class has 7 samples. Training accuracy keeps going up, while validation accuracy never exceeds 70%.

I suggest the "Bias and Variance" and "Learning curves" parts of Machine Learning Yearning by Andrew Ng.

The hypothesis function you are using is so complex that your model fits the training data perfectly but fails to do so on the test/validation data. `from keras.preprocessing.image import ImageDataGenerator`. I'm getting a training accuracy of 99.97%.

The reasons for this can be as follows. You build the model with training data and validate it with the test data. The trained model showed 72% accuracy and the test results showed 68%.

I ran k-fold cross-validation multiple times and controlled for the uneven models with stratified samples, both between training and test and within the folds. If your model has low error on the training set but high error on the test set, this is indicative of high variance: your model has failed to generalize to the second set of data. Following is my code, which is very simple.
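As a rough illustration of the stratified folds mentioned above, here is a minimal standard-library sketch. The function name `stratified_folds` and the toy `cat`/`dog` labels are assumptions made for this example, not code from the original posts; a library such as scikit-learn offers a production-grade equivalent.

```python
import random
from collections import Counter, defaultdict


def stratified_folds(labels, k, seed=0):
    """Assign each sample index to one of k folds, keeping class ratios similar."""
    rng = random.Random(seed)
    by_class = defaultdict(list)
    for idx, label in enumerate(labels):
        by_class[label].append(idx)

    folds = [[] for _ in range(k)]
    for label in sorted(by_class):
        indices = by_class[label]
        rng.shuffle(indices)
        # Deal the shuffled indices of this class round-robin across the folds.
        for position, idx in enumerate(indices):
            folds[position % k].append(idx)
    return folds


# Hypothetical imbalanced dataset: 20 "cat" samples and 10 "dog" samples.
labels = ["cat"] * 20 + ["dog"] * 10
folds = stratified_folds(labels, k=5)

for fold_id, test_idx in enumerate(folds):
    held_out = set(test_idx)
    train_idx = [i for i in range(len(labels)) if i not in held_out]
    # Every held-out fold keeps the 2:1 class ratio of the full dataset.
    print(fold_id, Counter(labels[i] for i in test_idx), len(train_idx))
```

Each fold serves once as the held-out set while the remaining indices are used for training, so every sample is evaluated exactly once.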

`from keras.models import Sequential`, `from keras.layers import Convolution2D, MaxPooling2D`. High training accuracy (99.9%) and low testing accuracy (41.1%): I'm using a 3-layer CNN with 8, 16, and 32 filters, each of size 5 × 5. I am trying to classify 2 classes of images.

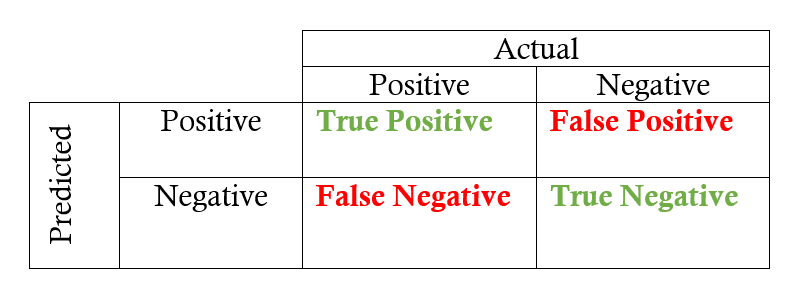

Errors normally get worse between training and test, but your dramatic shift from 100% accuracy on training to 40% accuracy on test is a large gap. Testing accuracy of 41.11%. Here "accuracy" is used in a broad sense: it can be replaced with F1, AUC, or error (in which case "increase" becomes "decrease", "higher" becomes "lower", etc.).

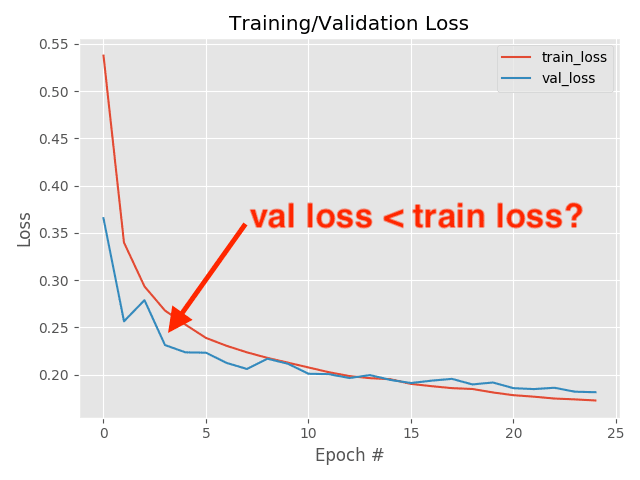

A training accuracy that is subjectively far higher than test accuracy indicates overfitting. This highlights an important application scenario where one trains a … You train your model on the loss function, so this is the most direct performance measure.

The solution here is to use 50% of the data to train on and 50% to evaluate the model. In this fine-tuning task, 16-bit HALP achieves a 0.32% higher test accuracy when compared to 16-bit SGD and closely matches the test accuracy from running 32-bit SGD from scratch. The accuracy is simply how good your machine learning model is at predicting the correct class for a given observation.
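The definition of accuracy given above amounts to the fraction of observations whose predicted class matches the true class. A minimal sketch, with toy labels invented for the example:

```python
def accuracy(y_true, y_pred):
    """Fraction of observations whose predicted class equals the true class."""
    if len(y_true) != len(y_pred):
        raise ValueError("y_true and y_pred must have the same length")
    if not y_true:
        raise ValueError("cannot compute accuracy on an empty set")
    correct = sum(1 for t, p in zip(y_true, y_pred) if t == p)
    return correct / len(y_true)


# Hypothetical labels: 4 of the 5 predictions are correct.
y_true = [0, 1, 1, 0, 1]
y_pred = [0, 1, 0, 0, 1]
print(accuracy(y_true, y_pred))  # 0.8
```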

I got a strange question. If the test and validation sets are sampled from the same process and are sufficiently large, they are interchangeable. If the accuracy is only loosely coupled to your loss function and the test loss is approximately as low as the validation loss, that might explain the accuracy gap.
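To make the "loosely coupled" point concrete, the sketch below builds two sets of predicted probabilities with almost the same mean cross-entropy loss but clearly different accuracy. The numbers are invented for illustration, and binary cross-entropy is an assumed choice of loss.

```python
import math


def accuracy(y_true, probs, threshold=0.5):
    """Accuracy after thresholding predicted P(class == 1)."""
    preds = [1 if p >= threshold else 0 for p in probs]
    return sum(t == p for t, p in zip(y_true, preds)) / len(y_true)


def log_loss(y_true, probs):
    """Mean binary cross-entropy; probs are predicted P(class == 1)."""
    total = 0.0
    for t, p in zip(y_true, probs):
        total += -math.log(p if t == 1 else 1.0 - p)
    return total / len(y_true)


y_true = [1, 1, 0, 0]
# Barely on the right side of the threshold for every sample.
hesitant = [0.51, 0.51, 0.49, 0.49]
# Very confident and right on three samples, confidently wrong on the last.
overconfident = [0.99, 0.99, 0.01, 0.93]

for name, probs in (("hesitant", hesitant), ("overconfident", overconfident)):
    # Both losses are roughly 0.67, yet the accuracies are 1.0 and 0.75.
    print(name, round(log_loss(y_true, probs), 3), accuracy(y_true, probs))
```

A single confidently wrong prediction costs as much loss as many hesitant correct ones, which is why loss and accuracy can move apart.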

Imagine you are using 99% of the data to train and 1% to test: the test set is then so small that its accuracy is a very noisy estimate and can easily come out higher or lower than the training accuracy. This is exactly what we expected. If you can generate a model with overall low error on both your train (past) and test (future) datasets, you'll have found a model that is "just right" and balances the right levels of bias and variance.
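One way to see how much the size of the test split matters is the binomial standard error of an accuracy estimate, sqrt(p(1 - p) / n). The sketch below assumes independent test samples and a hypothetical true accuracy of 0.90; the function name is made up for this example.

```python
import math


def accuracy_standard_error(acc, n):
    """Binomial standard error of an accuracy estimate measured on n samples."""
    return math.sqrt(acc * (1.0 - acc) / n)


# Assumed true accuracy of 0.90, measured on test sets of different sizes.
for n in (50, 500, 5000):
    se = accuracy_standard_error(0.90, n)
    print(f"n={n}: accuracy 0.90 +/- {se:.3f}")
```

With only a few dozen test samples the measured accuracy can swing by several points from one split to the next, so a small difference between training and test accuracy may be noise.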

It presents plots and interpretations for all the … What are scenarios with low training accuracy as well as low test accuracy termed? Bug in the code.

(a) Overfitting (b) High Variance (c) Low Bias (d) High Bias. vidyatshetty changed the title from "High accuracy on training data (100%) and low accuracy on test data set (60%)" to "High accuracy on training data (98%) and low accuracy on test data set (60%)" on Mar 16, 2018.

I think I simplified the architecture enough and applied enough dropout, because my network is now too dumb to learn anything and returns random results (for a 3-class classifier, 33% is random accuracy), even on the training dataset. When the train and test datasets are created correctly, the classifier's mean accuracy drops from 0.93 to 0.53. If you dig a little bit you will find out (spoiler alert) that the test data contained 86 of the rows with the …
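The "33% is random accuracy" remark can be checked with a trivial baseline. The helper names and toy labels below are assumptions for illustration; the idea is to compare a model against uniform guessing and against always predicting the most frequent class.

```python
from collections import Counter


def chance_accuracy(num_classes):
    """Expected accuracy of uniform random guessing on balanced classes."""
    return 1.0 / num_classes


def majority_baseline(labels):
    """Accuracy obtained by always predicting the most frequent class."""
    most_common_count = Counter(labels).most_common(1)[0][1]
    return most_common_count / len(labels)


balanced = ["a"] * 10 + ["b"] * 10 + ["c"] * 10
imbalanced = ["a"] * 8 + ["b"] * 2

print(round(chance_accuracy(3), 3))  # about 0.333 for three balanced classes
print(majority_baseline(imbalanced))  # 0.8 without learning anything
```

A model stuck at either baseline on the training set itself has not learned anything useful, which points to underfitting or a bug rather than overfitting.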

But I always reach similar results. Though I am getting high train and validation accuracy (0.97) after 10 epochs, my test results are awful (precision 0.48), and the confusion matrix shows the network is predicting the images for the wrong class (results attached). I also used the 1-SE rule (a slightly less-than-optimal model) as the choice of model to protect against overfitting.
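The 1-SE choice mentioned above is commonly described as picking the simplest model whose cross-validated score is within one standard error of the best score. The sketch below follows that description; the function name, the use of tree depth as the complexity measure, and the fold accuracies are all hypothetical.

```python
import math
from statistics import mean, stdev


def one_standard_error_choice(cv_scores):
    """Return the lowest complexity whose mean score is within 1 SE of the best.

    cv_scores: list of (complexity, [per-fold accuracies]) pairs.
    """
    summary = []
    for complexity, scores in cv_scores:
        standard_error = stdev(scores) / math.sqrt(len(scores))
        summary.append((complexity, mean(scores), standard_error))

    best = max(summary, key=lambda row: row[1])
    threshold = best[1] - best[2]  # best mean minus its standard error
    eligible = [row for row in summary if row[1] >= threshold]
    return min(eligible, key=lambda row: row[0])[0]


# Hypothetical 5-fold accuracies for models of increasing complexity (tree depth).
cv_scores = [
    (1, [0.60, 0.62, 0.58, 0.61, 0.59]),
    (3, [0.70, 0.72, 0.70, 0.71, 0.70]),
    (5, [0.71, 0.74, 0.69, 0.72, 0.70]),
    (9, [0.70, 0.66, 0.73, 0.68, 0.71]),
]
print(one_standard_error_choice(cv_scores))  # 3
```

Depth 5 has the best mean here, but depth 3 is within one standard error of it, so the simpler model is chosen.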

I built a model using 80% of the data for training and 20% for testing. In essence, your model has learned particulars that help it perform better on your training data but are not applicable to the larger data population, and therefore result in worse performance. You build the model with training data and validate it with the test data.
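A shuffled 80/20 split like the one described can be sketched with the standard library alone. The function name and its defaults are assumptions for this example, not the poster's code.

```python
import random


def train_test_split(samples, test_fraction=0.2, seed=42):
    """Shuffle a copy of the samples and split off test_fraction for testing."""
    rng = random.Random(seed)
    shuffled = list(samples)
    rng.shuffle(shuffled)
    n_test = int(round(len(shuffled) * test_fraction))
    return shuffled[n_test:], shuffled[:n_test]


samples = list(range(100))  # stand-in for 100 labelled examples
train, test = train_test_split(samples)
print(len(train), len(test))  # 80 20
```

Shuffling before splitting matters when the data is ordered, for example by class or by time of collection; the test set must also stay disjoint from the training set.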

I train a two-layer CNN using flow_from_directory; the training accuracy is very high while the validation accuracy is very low. What are scenarios with high training accuracy and low test accuracy called? When a machine learning model has high training accuracy and very low validation accuracy, this case is known as overfitting.
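The terms used in these questions (high bias when accuracy is low on both sets, high variance or overfitting when training accuracy is far above test accuracy) can be turned into a rough check. The thresholds `target_acc` and `max_gap` below are arbitrary assumptions and would need tuning for a real problem.

```python
def diagnose(train_acc, test_acc, target_acc=0.90, max_gap=0.05):
    """Rough bias/variance reading of a pair of accuracies.

    target_acc and max_gap are illustrative thresholds, not standard values.
    """
    if train_acc < target_acc:
        # The model cannot even fit the data it was trained on.
        return "high bias (underfitting)"
    if train_acc - test_acc > max_gap:
        # Fits the training data but does not generalize.
        return "high variance (overfitting)"
    return "balanced"


print(diagnose(0.999, 0.411))  # high variance (overfitting)
print(diagnose(0.33, 0.33))    # high bias (underfitting)
print(diagnose(0.93, 0.91))    # balanced
```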

How to interpret a test accuracy higher than training set accuracy? When training accuracy (or whatever metric you are using) is much higher than your testing accuracy, you have an overfit model. This means that the test …

Your model has effectively memorised the exact input and output pairs in the training set and, in order to do so, has constructed an over-complex decision surface that guarantees correct classification of each training example. The most likely culprit is your train/test split percentage. 16-bit HALP can improve the test accuracy of a checkpointed model trained using 16-bit SGD.
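Memorising the training pairs can be shown in its most extreme form with a lookup table. The class below is a deliberately silly, hypothetical model (not a CNN): it stores every training pair and falls back to the most common training label for unseen inputs.

```python
from collections import Counter


class LookupTableClassifier:
    """Memorises training pairs; unseen inputs get the most common train label."""

    def fit(self, inputs, labels):
        self.table = dict(zip(inputs, labels))
        self.fallback = Counter(labels).most_common(1)[0][0]
        return self

    def predict(self, inputs):
        return [self.table.get(x, self.fallback) for x in inputs]


def accuracy(y_true, y_pred):
    return sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)


# Hypothetical toy task: the label is 1 when the number is even, else 0.
train_x = [1, 2, 3, 5, 7, 9, 11, 13]
train_y = [int(x % 2 == 0) for x in train_x]
test_x = [4, 6, 8, 10, 12, 15]
test_y = [int(x % 2 == 0) for x in test_x]

model = LookupTableClassifier().fit(train_x, train_y)
train_acc = accuracy(train_y, model.predict(train_x))
test_acc = accuracy(test_y, model.predict(test_x))
print(train_acc, round(test_acc, 3))  # perfect on train, poor on unseen inputs
```

The table reproduces every training label, yet it has learned nothing about the even/odd rule, so accuracy collapses on inputs it has not seen.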

A Comprehensive Hands On Guide To Transfer Learning With Real World Applications In Deep Learning By Dipanjan Dj Sarkar Towards Data Science

How To Interpret The Neural Network Model When Validation Accuracy Oscillates For Each Epoch

When Can Validation Accuracy Be Greater Than Training Accuracy For Deep Learning Models

What Is A Learning Curve In Machine Learning Stack Overflow

Underfitting And Overfitting In Machine Learning

How Is It Possible That Validation Loss Is Increasing While Validation Accuracy Is Increasing As Well Cross Validated

Effect Of Batch Size On Training Dynamics By Kevin Shen Mini Distill Medium

Why Is My Validation Loss Lower Than My Training Loss Pyimagesearch

How To Interpret Loss And Accuracy For A Machine Learning Model Stack Overflow

What Does It Mean When Train And Validation Loss Diverge From Epoch 1 Stack Overflow

Overfitting And Underfitting In Machine Leaning Model Performance By Itbodhi Medium

Aible Announces World S First Fully Automated Machine Learning Ai Platform For Data Scientists And Developers Machine Learning Data Scientist Data

Higher Validation Accuracy Than Training Accurracy Using Tensorflow And Keras Stack Overflow

Validation Accuracy Stagnates While Training Accuracy Improves Stack Overflow

Machine Learning Accuracy True Vs False Positive Negative
